Running Hadoop on a Raspberry Pi. I only have one Raspberry Pi, so it runs in pseudo-distributed mode.

Preparation

- Bought a Transcend SDHC card (32GB, Class 10)

- Chose Soft-float Debian “wheezy”, since the JVM runs on it. Download: 2013-05-29-wheezy-armel.img

- Writing the image to the SD card with Win32DiskImager sometimes fails, so first delete all partitions on the card with the diskpart tool

- Downloaded Hadoop 1.1.2: hadoop-1.1.2.tar.gz

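OS settings

- First-boot menu (raspi-config)

  - Expand Filesystem
  - Internationalisation Options -> Change Locale: ja_JP.UTF-8
  - Internationalisation Options -> Change Timezone: Tokyo
  - Internationalisation Options -> Change Keyboard Layout: Generic 105-key (Intel) PC, with Control+Alt+Backspace set to terminate the X server
  - Advanced Options -> adjust the CPU/GPU memory split, etc.

After the first boot, the same menu can be opened with raspi-config (as root).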

- Settings after the OS boots

- Set a root password via sudo, since working as root saves hassle

- Set the default runlevel to 3 in inittab

- The address comes from DHCP, so change it to static: /etc/network/interfaces

- apt-get update, apt-get upgrade

- Additional packages: telnetd, vsftpd, sysv-rc-conf, tightvncserver, xrdp, dstat

- Allow root login (telnetd, vsftpd)

- apt-get update, apt-get upgrade

- Keep rpcbind enabled

- Configure the NTP client
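The runlevel and static-IP steps above can also be scripted. The following is a minimal sketch under stated assumptions, not the exact steps used here: the hypothetical functions `set_default_runlevel` and `set_static_eth0` edit the file passed as their first argument and keep the previous version as `FILE.bak`. They assume the Debian-style `id:2:initdefault:` line in /etc/inittab and a single `iface eth0 inet dhcp` line in /etc/network/interfaces.

```shell
#!/usr/bin/env bash
# Sketch of the "runlevel 3" and "static IP" steps. Each function edits the
# file given as its first argument and keeps the previous version as FILE.bak.
# Assumptions: Debian-style "id:N:initdefault:" line in inittab, and eth0
# configured by the single line "iface eth0 inet dhcp" in interfaces.

# Set the runlevel field of the "id:N:initdefault:" line to LEVEL.
set_default_runlevel() {
  local file="$1" level="$2"
  cp -p "$file" "$file.bak"
  awk -F: -v OFS=: -v lvl="$level" \
    '$1 == "id" && $3 == "initdefault" { $2 = lvl } { print }' \
    "$file.bak" > "$file"
}

# Replace "iface eth0 inet dhcp" with a static stanza built from the given
# address, netmask and gateway; all other lines are copied unchanged.
set_static_eth0() {
  local file="$1" address="$2" netmask="$3" gateway="$4"
  cp -p "$file" "$file.bak"
  awk -v a="$address" -v m="$netmask" -v g="$gateway" '
    $0 == "iface eth0 inet dhcp" {
      print "iface eth0 inet static"
      print "    address " a
      print "    netmask " m
      print "    gateway " g
      next
    }
    { print }
  ' "$file.bak" > "$file"
}
```

Run as root, for example `set_default_runlevel /etc/inittab 3` and `set_static_eth0 /etc/network/interfaces 192.168.1.50 255.255.255.0 192.168.1.1`. The addresses are placeholders; use ones that fit your LAN.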

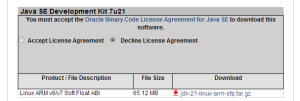

Oracle JDK installation

- OpenJDK would also do (and is easier to set up), but Oracle JDK seemed the better choice, so I went with Oracle JDK 7 (for the ARM Soft Float ABI)

http://www.oracle.com/technetwork/java/javase/downloads/jdk7-downloads-1880260.html

- Oracle JDK 8 is also said to work well (currently an Early Access build)

https://wiki.openjdk.java.net/display/OpenJFX/OpenJFX+on+the+Raspberry+Pi

```
root@raspberrypi:~# ls
hadoop-1.1.2.tar.gz  jdk-7u21-linux-arm-sfp.tar.gz  run_vncserver
root@raspberrypi:~# tar zxf jdk-7u21-linux-arm-sfp.tar.gz -C /opt
root@raspberrypi:~# update-alternatives --install "/usr/bin/ja
jar        jarsigner  java       javac      javadoc    javah      javap
root@raspberrypi:~# update-alternatives --install "/usr/bin/java" "java" "/opt/jdk1.7.0_21/bin/java" 1
root@raspberrypi:~#
root@raspberrypi:~# java -version
java version "1.7.0_03"
OpenJDK Runtime Environment (IcedTea7 2.1.7) (7u3-2.1.7-1)
OpenJDK Zero VM (build 22.0-b10, mixed mode)
root@raspberrypi:~#
```
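The `java -version` output above still reports OpenJDK. That most likely means the Oracle JDK entry, registered with priority 1, lost to the existing OpenJDK entry, because update-alternatives in auto mode picks the highest priority. `update-alternatives --config java` lets you choose interactively. The sketch below uses a hypothetical function `use_jdk` and assumes the JDK is unpacked under /opt/jdk1.7.0_21 and the script runs as root. It registers java and javac and then pins them with `--set`. For hduser this matters less, because the .bashrc shown later puts $JAVA_HOME/bin at the front of PATH.

```shell
#!/usr/bin/env bash
# Sketch: register Oracle JDK tools with update-alternatives and select them
# explicitly (manual mode) instead of relying on auto-mode priorities.
# Assumption: JDK unpacked to /opt/jdk1.7.0_21 (as above); must run as root.
use_jdk() {
  local jdk_home="${1:-/opt/jdk1.7.0_21}"
  local tool
  for tool in java javac; do
    # Add (or update) the candidate; the priority only matters in auto mode
    update-alternatives --install "/usr/bin/$tool" "$tool" "$jdk_home/bin/$tool" 1
    # Point the link group at this candidate and switch it to manual mode
    update-alternatives --set "$tool" "$jdk_home/bin/$tool"
  done
}
```

Usage: `use_jdk && java -version`, which should then report the Oracle JDK instead of OpenJDK.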

User creation

```
root@raspberrypi:~# addgroup hadoop
root@raspberrypi:~# adduser --ingroup hadoop hduser
root@raspberrypi:~# adduser hduser
```

Passwordless SSH setup (localhost)

```
root@raspberrypi:~# su - hduser
hduser@raspberrypi ~ $
hduser@raspberrypi ~ $ ssh-keygen -t rsa -P ""
Generating public/private rsa key pair.
Enter file in which to save the key (/home/hduser/.ssh/id_rsa):
Created directory '/home/hduser/.ssh'.
Your identification has been saved in /home/hduser/.ssh/id_rsa.
Your public key has been saved in /home/hduser/.ssh/id_rsa.pub.
The key fingerprint is:
95:5e:cb:32:fc:ef:db:1a:05:ff:4b:b8:47:0c:cb:19 hduser@raspberrypi
The key's randomart image is:
+--[ RSA 2048]----+
(omitted)
hduser@raspberrypi ~ $ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
hduser@raspberrypi ~ $ ssh localhost
The authenticity of host 'localhost (127.0.0.1)' can't be established.
ECDSA key fingerprint is 2e:f6:2f:7d:3f:88:30:17:1e:59:39:a4:bc:40:d0:be.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.
Linux raspberrypi 3.6.11+ #474 PREEMPT Thu Jun 13 17:14:42 BST 2013 armv6l
(omitted)
```
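The start scripts launch some daemons through `ssh localhost`, which is why key-based login is needed. Below is a small sketch with hypothetical function names. `ensure_authorized_key` appends the public key only once and applies the usual strict permissions. `check_passwordless_ssh` is a non-interactive test: with `-o BatchMode=yes`, ssh fails instead of prompting for a password. The check assumes the host key has already been accepted once, as in the session above.

```shell
#!/usr/bin/env bash
# Sketch: key-based SSH to localhost for the Hadoop start/stop scripts.

# Append SSH_DIR/id_rsa.pub to SSH_DIR/authorized_keys once, with the usual
# strict permissions (directory 700, authorized_keys 600).
ensure_authorized_key() {
  local ssh_dir="${1:-$HOME/.ssh}"
  local pub="$ssh_dir/id_rsa.pub"
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$auth"
  chmod 600 "$auth"
  # Skip the append if the exact key line is already present (safe to re-run)
  if ! grep -qxF "$(cat "$pub")" "$auth"; then
    cat "$pub" >> "$auth"
  fi
}

# Exit status 0 only if key-based login works: BatchMode=yes makes ssh fail
# instead of asking for a password.
check_passwordless_ssh() {
  ssh -o BatchMode=yes "${1:-localhost}" true
}
```

Usage, as hduser: `ensure_authorized_key && check_passwordless_ssh && echo OK`.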

Hadoop installation and setup

- Environment variables (hduser's .bashrc)

```
# For Hadoop Environment
export JAVA_HOME=/opt/jdk1.7.0_21
export HADOOP_INSTALL=/usr/local/hadoop
export PATH=$JAVA_HOME/bin:$HADOOP_INSTALL/bin:$PATH
```

- Installing Hadoop

```
root@raspberrypi:~# tar zxf hadoop-1.1.2.tar.gz -C /usr/local
root@raspberrypi:~# cd /usr/local/
root@raspberrypi:/usr/local# mv hadoop-1.1.2 hadoop
root@raspberrypi:/usr/local# chown -R hduser:hadoop hadoop
root@raspberrypi:~# su - hduser
hduser@raspberrypi ~ $ hadoop version
Hadoop 1.1.2
Subversion https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.1 -r 1440782
Compiled by hortonfo on Thu Jan 31 02:03:24 UTC 2013
From source with checksum c720ddcf4b926991de7467d253a79b8b
hduser@raspberrypi ~ $
```

- Minimal Hadoop configuration

```
# cd /usr/local/hadoop/conf
```

core-site.xml

```xml
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/fs/hadoop/tmp</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:54310</value>
  </property>
</configuration>
```

hdfs-site.xml

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
```

mapred-site.xml

```xml
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:54311</value>
  </property>
</configuration>
```
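For reference, the three files above can also be written in one go. The sketch below uses a hypothetical function `write_minimal_conf` that overwrites core-site.xml, hdfs-site.xml and mapred-site.xml in the given directory (default /usr/local/hadoop/conf) with exactly the values shown above. The `<?xml version="1.0"?>` declaration is an addition here and is not part of the original snippets.

```shell
#!/usr/bin/env bash
# Sketch: write the minimal pseudo-distributed configuration from this post.
# CONF_DIR defaults to /usr/local/hadoop/conf; existing files are overwritten.
write_minimal_conf() {
  local conf="${1:-/usr/local/hadoop/conf}"

  cat > "$conf/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/fs/hadoop/tmp</value>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:54310</value>
  </property>
</configuration>
EOF

  cat > "$conf/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF

  cat > "$conf/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:54311</value>
  </property>
</configuration>
EOF
}
```

Run it as hduser, who owns /usr/local/hadoop after the chown above: `write_minimal_conf`.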

Additional setup

```
root@raspberrypi:/usr/local# mkdir -p /usr/local/fs/hadoop/tmp
root@raspberrypi:/usr/local# chown hduser:hadoop /usr/local/fs/hadoop/tmp
root@raspberrypi:/usr/local# chmod 750 /usr/local/fs/hadoop/tmp
```

Formatting the DFS

```
hduser@raspberrypi ~ $ hadoop namenode -format
13/08/04 10:41:42 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = raspberrypi/127.0.1.1
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 1.1.2
STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.1 -r 1440782; compiled by 'hortonfo' on Thu Jan 31 02:03:24 UTC 2013
************************************************************/
13/08/04 10:41:45 INFO util.GSet: VM type = 32-bit
13/08/04 10:41:45 INFO util.GSet: 2% max memory = 19.335 MB
13/08/04 10:41:45 INFO util.GSet: capacity = 2^22 = 4194304 entries
13/08/04 10:41:45 INFO util.GSet: recommended=4194304, actual=4194304
13/08/04 10:41:48 INFO namenode.FSNamesystem: fsOwner=hduser
13/08/04 10:41:49 INFO namenode.FSNamesystem: supergroup=supergroup
13/08/04 10:41:49 INFO namenode.FSNamesystem: isPermissionEnabled=true
13/08/04 10:41:49 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100
13/08/04 10:41:49 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)
13/08/04 10:41:49 INFO namenode.NameNode: Caching file names occuring more than 10 times
13/08/04 10:41:51 INFO common.Storage: Image file of size 112 saved in 0 seconds.
13/08/04 10:41:52 INFO namenode.FSEditLog: closing edit log: position=4, editlog=/usr/local/fs/hadoop/tmp/dfs/name/current/edits
13/08/04 10:41:52 INFO namenode.FSEditLog: close success: truncate to 4, editlog=/usr/local/fs/hadoop/tmp/dfs/name/current/edits
13/08/04 10:41:53 INFO common.Storage: Storage directory /usr/local/fs/hadoop/tmp/dfs/name has been successfully formatted.
13/08/04 10:41:53 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at raspberrypi/127.0.1.1
************************************************************/
```

- Starting Hadoop

```
hduser@raspberrypi /usr/local/hadoop/bin $ ./start-all.sh
starting namenode, logging to /usr/local/hadoop/libexec/../logs/hadoop-hduser-namenode-raspberrypi.out
localhost: starting datanode, logging to /usr/local/hadoop/libexec/../logs/hadoop-hduser-datanode-raspberrypi.out
localhost: starting secondarynamenode, logging to /usr/local/hadoop/libexec/../logs/hadoop-hduser-secondarynamenode-raspberrypi.out
starting jobtracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-hduser-jobtracker-raspberrypi.out
localhost: starting tasktracker, logging to /usr/local/hadoop/libexec/../logs/hadoop-hduser-tasktracker-raspberrypi.out
hduser@raspberrypi /usr/local/hadoop/bin $
hduser@raspberrypi /usr/local/hadoop/bin $ jps
5660 JobTracker
5586 SecondaryNameNode
5866 Jps
5372 NameNode
5770 TaskTracker
hduser@raspberrypi /usr/local/hadoop/bin $
```

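*Note: start-all started the NameNode and JobTracker, but the DataNode and TaskTracker failed with "Error: JAVA_HOME is not set.", so I added JAVA_HOME to /usr/local/hadoop/conf/hadoop-env.sh. (The daemons prefixed with "localhost:" in the start-all.sh output are launched through ssh localhost, which apparently does not pick up the JAVA_HOME exported in .bashrc.)

```
export JAVA_HOME=/opt/jdk1.7.0_21
```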
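The jps listing above has no DataNode. Comparing jps output against the daemons you expect makes that easy to spot. The sketch below uses a hypothetical function `missing_daemons` that reads jps output on stdin and prints each expected class name that is not running. It assumes jps prints `<pid> <ClassName>` per line, as in the listings in this post.

```shell
#!/usr/bin/env bash
# Sketch: list expected Hadoop daemons that are missing from `jps` output.
# Reads jps output on stdin; expected main-class names are the arguments.
missing_daemons() {
  local jps_out name
  jps_out=$(cat)
  for name in "$@"; do
    # Each jps line is "<pid> <MainClass>"; look for an exact match in field 2
    if ! printf '%s\n' "$jps_out" |
        awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "$name"
    fi
  done
}
```

Usage: `jps | missing_daemons NameNode DataNode JobTracker TaskTracker` (add SecondaryNameNode if you keep it). Empty output means every listed daemon is running.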

*Note: I don't need the SecondaryNameNode, so I commented out the following lines in start-dfs.sh and stop-dfs.sh (in /usr/local/hadoop/bin):

```
start-dfs.sh:"$bin"/hadoop-daemons.sh --config $HADOOP_CONF_DIR --hosts masters start secondarynamenode
stop-dfs.sh:"$bin"/hadoop-daemons.sh --config $HADOOP_CONF_DIR --hosts masters stop secondarynamenode
```
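The same edit can be scripted. The sketch below uses a hypothetical function name, assumes the scripts live in /usr/local/hadoop/bin, and keeps a .bak copy of each. It prefixes `#` to every uncommented line that mentions secondarynamenode, so it is worth running `grep secondarynamenode start-dfs.sh stop-dfs.sh` first to confirm that only the lines above match.

```shell
#!/usr/bin/env bash
# Sketch: comment out the secondarynamenode start/stop lines.
# BIN_DIR defaults to /usr/local/hadoop/bin; each script is backed up as *.bak.
comment_out_secondarynamenode() {
  local bin="${1:-/usr/local/hadoop/bin}"
  local f
  for f in "$bin/start-dfs.sh" "$bin/stop-dfs.sh"; do
    cp -p "$f" "$f.bak"
    # Prefix "#" to lines that mention secondarynamenode and are not
    # already comments; writing back into "$f" keeps its execute bit.
    awk '/secondarynamenode/ && !/^[ \t]*#/ { $0 = "#" $0 } { print }' \
      "$f.bak" > "$f"
  done
}
```

Running it twice is harmless, because lines that are already commented out are skipped.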

*Note: When using Oracle JDK 7 (for the ARM Soft Float ABI), the DataNode has to be started in client mode.

Without this change the daemons start, but the DataNode does not work properly, so jobs fail.

Edit the following line in bin/hadoop (remove -server):

```
#HADOOP_OPTS="$HADOOP_OPTS -server $HADOOP_DATANODE_OPTS"
HADOOP_OPTS="$HADOOP_OPTS $HADOOP_DATANODE_OPTS"
```
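Here is a sketch of the bin/hadoop edit, using a hypothetical function name. It looks for the exact line quoted above (leading whitespace allowed), keeps it as a comment and adds the version without -server, mirroring the manual edit. A .bak copy is kept. If the line in your bin/hadoop differs, the file is written back unchanged.

```shell
#!/usr/bin/env bash
# Sketch: drop -server from the DataNode's HADOOP_OPTS line in bin/hadoop.
# SCRIPT defaults to /usr/local/hadoop/bin/hadoop; original kept as *.bak.
drop_datanode_server_flag() {
  local script="${1:-/usr/local/hadoop/bin/hadoop}"
  cp -p "$script" "$script.bak"
  awk '
    {
      line = $0
      sub(/^[ \t]+/, "", line)   # compare without leading indentation
      if (line == "HADOOP_OPTS=\"$HADOOP_OPTS -server $HADOOP_DATANODE_OPTS\"") {
        indent = substr($0, 1, length($0) - length(line))
        print indent "#" line    # keep the original line as a comment
        print indent "HADOOP_OPTS=\"$HADOOP_OPTS $HADOOP_DATANODE_OPTS\""
        next
      }
      print
    }
  ' "$script.bak" > "$script"
}
```

Writing back into the existing file keeps its execute bit; run it as the owner of /usr/local/hadoop (hduser) or as root.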

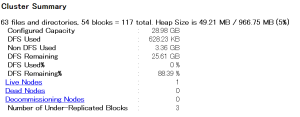

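Oracle JDK 8 apparently does not support the server VM (yet?), and 7 probably doesn't either. In server mode, the NameNode web UI on port 50070 showed "Configured Capacity", "DFS Used" and similar values as 0, which confirmed that the DataNode was not working properly. After the fix, it worked as shown in the screenshot.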
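Besides the web UI on port 50070, `hadoop dfsadmin -report` prints the Configured Capacity on the command line. The sketch below uses a hypothetical function name and fails when the first Configured Capacity value is 0 or missing. It assumes report lines of the form `Configured Capacity: <bytes> (<human-readable>)`.

```shell
#!/usr/bin/env bash
# Sketch: read `hadoop dfsadmin -report` output on stdin and fail if the first
# "Configured Capacity:" value is 0 or not present at all.
check_configured_capacity() {
  awk -F': *' '
    /^Configured Capacity:/ {
      split($2, parts, " ")   # "<bytes> (<human-readable>)" -> bytes first
      cap = parts[1]
      found = 1
      exit
    }
    END {
      if (!found || cap + 0 == 0) { print "DataNode capacity is 0 or not reported"; exit 1 }
      print "Configured Capacity: " cap " bytes"
    }
  '
}
```

Usage, as hduser: `hadoop dfsadmin -report | check_configured_capacity`.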

- Stopping Hadoop

```
hduser@raspberrypi /usr/local/hadoop/bin $ ./stop-all.sh
stopping jobtracker
localhost: stopping tasktracker
stopping namenode
localhost: stopping datanode
hduser@raspberrypi /usr/local/hadoop/bin $ jps
3035 Jps
hduser@raspberrypi /usr/local/hadoop/bin $
```
